BUILDING ETHICAL AI THROUGH COLLECTIVE INTELLIGENCE: ADDRESSING THE TROLLEY PROBLEM IN SELF-DRIVING CARS

Authors:

By Bid Oscar Hountondji, Arinze Ezirim, Fidelis Zumah, George Tetteh and Isaac BOAKYE ASIRIFI

Affiliations: Africa Collective Intelligence Lab (ACILAB)

We will have the honour of presenting this work at the conference ‘Collective Intelligence: forms, functions and evolution across species, societies, and industries’, a scientific discussion meeting held in Rabat, Morocco, from 15 to 17 May 2024 (09:00 to 17:00).


Abstract

The deployment of Artificial Intelligence (AI) in self-driving cars (SDCs) raises significant ethical challenges, particularly in decision-making scenarios with life-and-death outcomes, such as those captured by the Trolley Problem. This study explores the use of collective intelligence (CI) to address these ethical issues, drawing on insights from the MIT Moral Machine experiment. Leveraging global perspectives on ethical dilemmas, we employ machine learning, notably a Decision Tree classifier, to encode ethical guidelines in SDCs that align with a broad consensus of human ethical judgments. Our results indicate substantial global agreement on certain ethical decisions and reflect a preference for driving styles that prioritize passenger safety. However, they also reveal that the system may opt for maneuvers that slightly compromise passenger comfort to avoid greater risks to pedestrians. This research underscores the potential of CI to create universally acceptable ethical frameworks that can enhance public trust in AI and facilitate the development of AI systems that are both ethically aware and socially responsible.

Keywords: Self-Driving Cars, Collective Intelligence, AI Ethics, Decision Trees, Public Trust in AI

1. Introduction

As self-driving cars (SDCs) transition from theoretical innovation to real-world application, the ethical dilemmas inherent in their decision-making processes have come to the forefront of technological discourse [6]. Traditional ethical frameworks struggle to address the unique challenges posed by AI-driven vehicles, particularly in scenarios that resemble the Trolley Problem, a thought experiment that questions the morality of decision-making in life-and-death situations [3, 4]. The advent of SDCs equipped with AI necessitates a reevaluation of ethical guidelines to accommodate the split-second decisions these vehicles must make.

Collective Intelligence (CI) refers to the collective behavior and shared knowledge that emerge from collaboration and competition among many individuals. It harnesses the wisdom of a diverse group to reach decisions or solve problems more effectively than individuals could alone. This article examines the application of CI principles as a novel approach to resolving the ethical concerns raised by SDCs. By leveraging the MIT Moral Machine experiment, a platform that captures global perspectives on moral dilemmas faced by SDCs [1], we illustrate how collective human judgment can inform and enhance the ethical programming of AI. This CI approach not only reflects a wider array of human values but also supports the development of SDC algorithms that are more closely aligned with societal expectations and ethical standards.

In exploring the integration of CI into AI ethics, we delve into the challenges of programming SDCs to navigate complex ethical landscapes, the role of machine learning in simulating ethical decision-making, and the potential for CI to guide the creation of adaptive, universally accepted ethical frameworks [2, 5]. This exploration is anchored in the belief that the collective wisdom of humanity can lead to more morally aware and socially responsible autonomous vehicles, marking a significant step forward in the ethical development of AI technologies.

The remainder of this paper is organized as follows: Section 2 presents the background of the study, Section 3 the methodology, Section 4 the results, Section 5 the discussion, and Section 6 the conclusion and future work.

2. Background and Context

This section presents the theoretical underpinnings of the Trolley Problem as it relates to ethical decision-making in AI. We explore historical and contemporary approaches to AI ethics, highlighting the significance of integrating human ethical judgments into AI systems. The MIT Moral Machine experiment is introduced as a pivotal study that captures global ethical judgments and informs our methodological approach.

2.1 The Trolley Problem Revisited

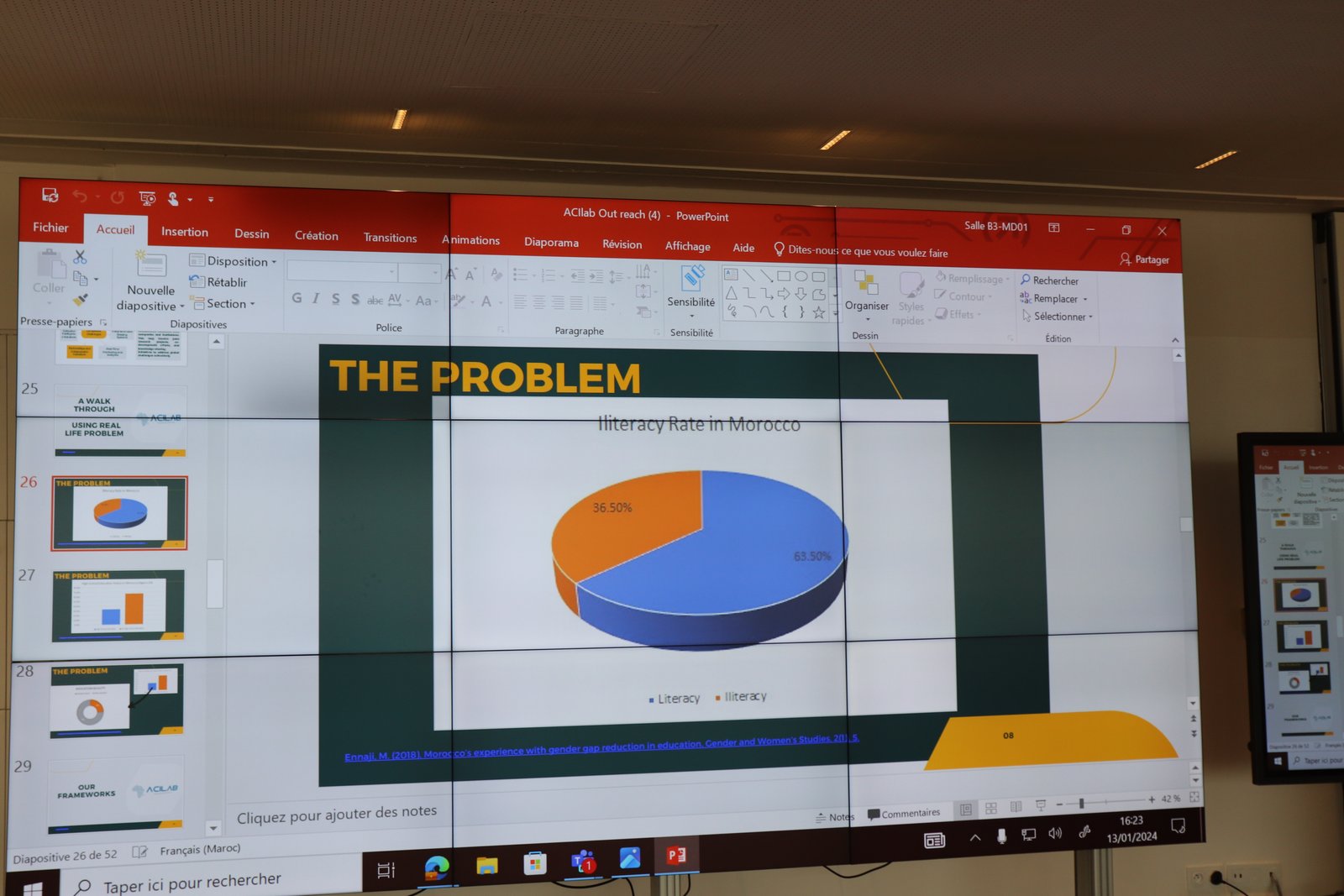

The Trolley Problem, a thought experiment that has intrigued ethicists for decades, poses a moral dilemma about decision-making in life-and-death situations. Originally framed by Philippa Foot in 1967 [3, 4], it presents a hypothetical scenario: a runaway trolley is barreling down a track towards five unsuspecting people. You are standing next to a lever that can divert the trolley onto another track, where only one person stands. The dilemma asks whether it is more ethical to pull the lever, sacrificing one life to save five, or to do nothing and allow the trolley to kill the five people.

Image 1: The Trolley Problem

When this problem is revisited in the context of Self-Driving Cars (SDCs), it transforms from a theoretical dilemma into a real and pressing issue. SDCs, equipped with Artificial Intelligence (AI), must be programmed to make split-second decisions that could result in saving lives or causing a tragedy [1, 2, 6]. This modern iteration of the Trolley Problem underscores the urgent need for robust ethical guidelines in AI decision-making processes.

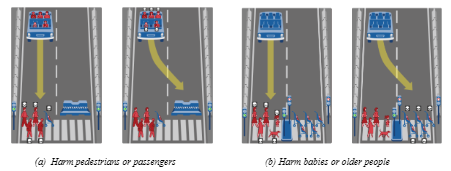

Image 2: The Trolley Problem revisited in the context of Self-Driving Cars (SDCs)

The core of the dilemma for SDCs lies in programming the AI to evaluate complex scenarios it may encounter on the road. Should the AI prioritize the lives of its passengers or those of pedestrians, or should it attempt to minimize overall harm? The question becomes even more complicated when considering variables such as the number of people involved, their ages, and other factors that could influence the perceived value of different lives. If these decisions are hard for humans to make, they are harder still to specify in advance for AI agents.

To address these challenges, a blend of utilitarian and deontological ethics is often proposed [2, 6, 7]. Utilitarianism suggests that the best action is the one that maximizes overall happiness or well-being, essentially arguing for the choice that saves the most lives. In contrast, deontological ethics focuses on rules, duties, and rights, suggesting that certain actions are inherently right or wrong, regardless of their outcomes. For instance, a deontological approach might argue against actively causing harm, even if doing so would save a greater number of lives.
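To make the contrast concrete, the sketch below encodes one possible reading of each theory as a Python decision rule for the classic two-track case. The `Outcome` type, its fields, and the function names are hypothetical illustrations rather than part of any cited framework, and real proposals are far more nuanced than these two rules.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Hypothetical summary of one available action in a dilemma."""
    action: str           # e.g. "stay" or "swerve"
    expected_deaths: int  # lives lost if this action is taken
    active_harm: bool     # True if the action actively redirects harm onto someone


def utilitarian_choice(outcomes):
    # Utilitarian reading: pick the action with the fewest expected deaths.
    return min(outcomes, key=lambda o: o.expected_deaths).action


def deontological_choice(outcomes, default="stay"):
    # Deontological reading (one of many): never pick an action that actively
    # causes harm, whatever the numbers; fall back to the default otherwise.
    permissible = [o for o in outcomes if not o.active_harm]
    return permissible[0].action if permissible else default


# The classic trolley case: staying kills five, pulling the lever kills one.
trolley = [Outcome("stay", 5, False), Outcome("swerve", 1, True)]
print(utilitarian_choice(trolley))    # swerve
print(deontological_choice(trolley))  # stay
```

The two rules disagree on exactly the case the Trolley Problem was designed to expose, which is why blended approaches are proposed.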

Incorporating these ethical theories into AI decision-making requires a nuanced approach. It involves programming not just for the quantifiable aspects of scenarios (e.g., the number of lives saved) but also for principles that respect individual rights and the intrinsic value of all human lives. The development of such ethical guidelines for SDCs represents a critical intersection of technology, philosophy, and law, and it demands a collaborative effort among ethicists, engineers, legal experts, and the public to navigate these complex moral landscapes [1, 6]. This is where Collective Intelligence principles can help address the Trolley Problem as it re-emerges in the era of SDCs.

Image 3: Integrating CI, Utilitarian and Deontological Ethics in AI Decision-Making for SDCs

2.2 The MIT Moral Machine Experiment

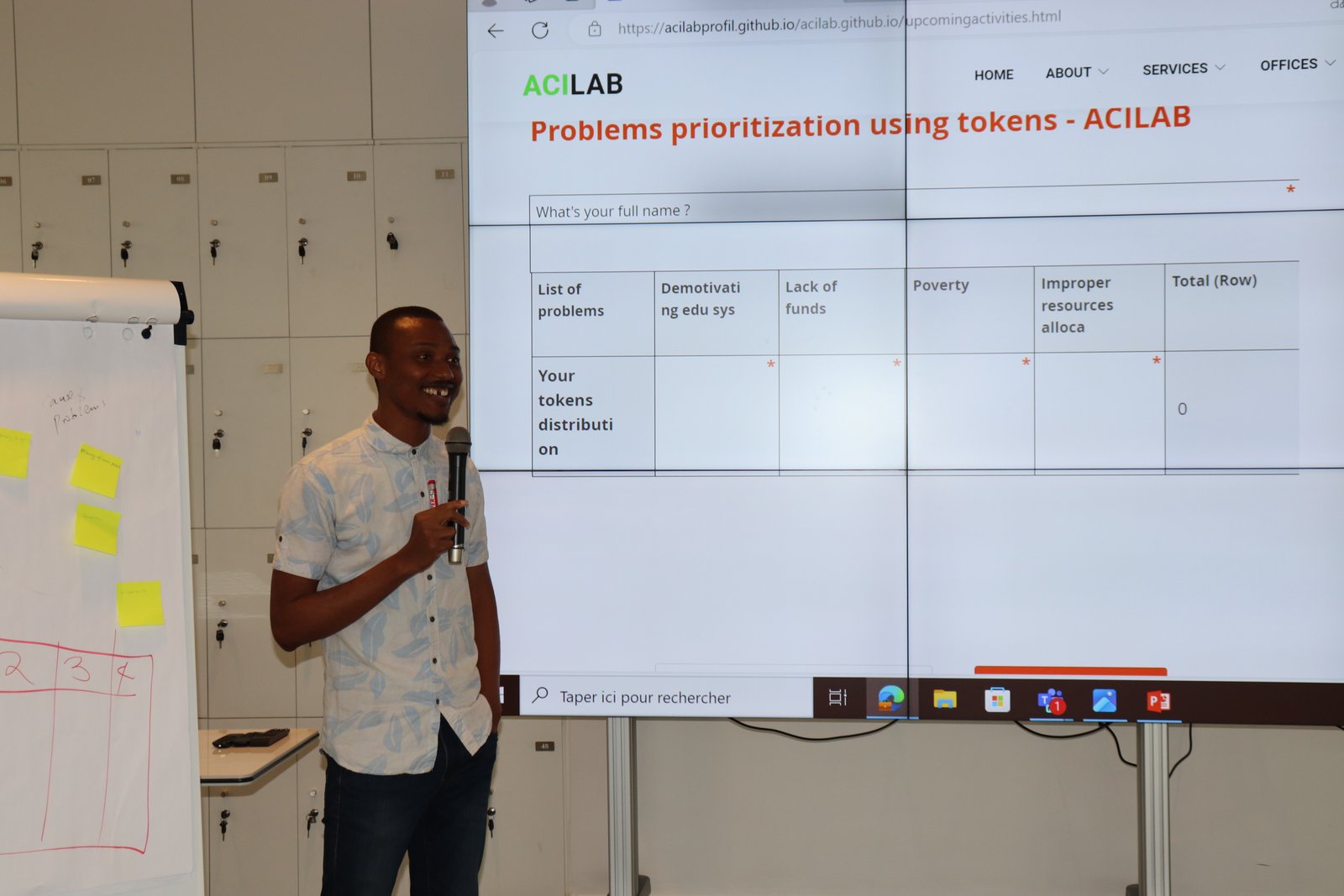

The MIT Moral Machine experiment represents a pioneering effort to harness global perspectives on the intricate moral decisions faced by Self-Driving Cars (SDCs). Developed by researchers at the Massachusetts Institute of Technology, this online platform was designed to explore how humans believe machines should resolve ethical dilemmas that involve life-and-death decisions. At its core, the Moral Machine experiment seeks to understand collective human judgment on these pressing ethical issues, providing invaluable insights into public ethical intuitions and preferences.

The platform operates by presenting users with a series of moral dilemmas, each scenario depicting a situation where an autonomous vehicle is faced with a no-win situation. For instance, a scenario might involve an SDC that must decide between continuing on its path, leading to the death of a group of pedestrians who are jaywalking, or swerving to avoid them, potentially harming the passengers inside the vehicle. These scenarios are carefully crafted to cover a wide range of variables, including the number of people involved, their ages, genders, and even species (for example, choosing between humans and pets). The results are then aggregated to reveal trends and patterns in how different cultures, age groups, and demographics think about these moral quandaries.
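The aggregation step can be illustrated with a minimal Python sketch that computes, for each respondent group, the share of decisions that spared the pedestrians. The records and group labels below are invented placeholders, not Moral Machine data, and the published analysis [1] relies on considerably more elaborate statistics.

```python
from collections import defaultdict

# Invented responses: (respondent_group, chose_to_spare_pedestrians).
responses = [
    ("region_a", True), ("region_a", True), ("region_a", False),
    ("region_b", True), ("region_b", False), ("region_b", False),
]


def share_sparing_by_group(rows):
    """Return, per group, the fraction of decisions that spared the pedestrians."""
    counts = defaultdict(lambda: [0, 0])  # group -> [spared, total]
    for group, spared in rows:
        counts[group][0] += int(spared)
        counts[group][1] += 1
    return {group: spared / total for group, (spared, total) in counts.items()}


print(share_sparing_by_group(responses))
```

Comparing these per-group shares is the simplest way to surface the cultural and demographic differences the platform was designed to reveal.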

Image 4: Two scenarios from the MIT Moral Machine Experiment Platform

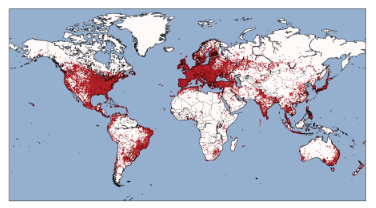

2.3 Global Participation and the Power of Collective Intelligence

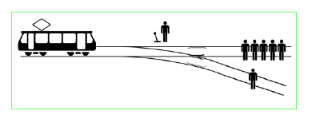

The Moral Machine Experiment leverages Collective Intelligence (CI), drawing from the collaborative efforts and judgments of individuals from varied cultural, geographical, and demographic backgrounds. The platform gathered 40 million decisions in ten languages from millions of people in 233 countries and territories.

Image 5: World map highlighting the locations of Moral Machine visitors. Each point represents a location from which at least one visitor made at least one decision (n = 39.6 million). The numbers of visitors or decisions from each location are not represented. [1]

This global participation enriches the dataset with a broad spectrum of moral and ethical perspectives, recognizing the universal challenge that moral dilemmas in autonomous vehicles represent [1, 6]. The collective data, analyzed to reveal patterns and preferences, provide valuable insights into societal norms and ethical expectations across cultures, helping to inform the development of ethical guidelines for AI systems in SDCs.

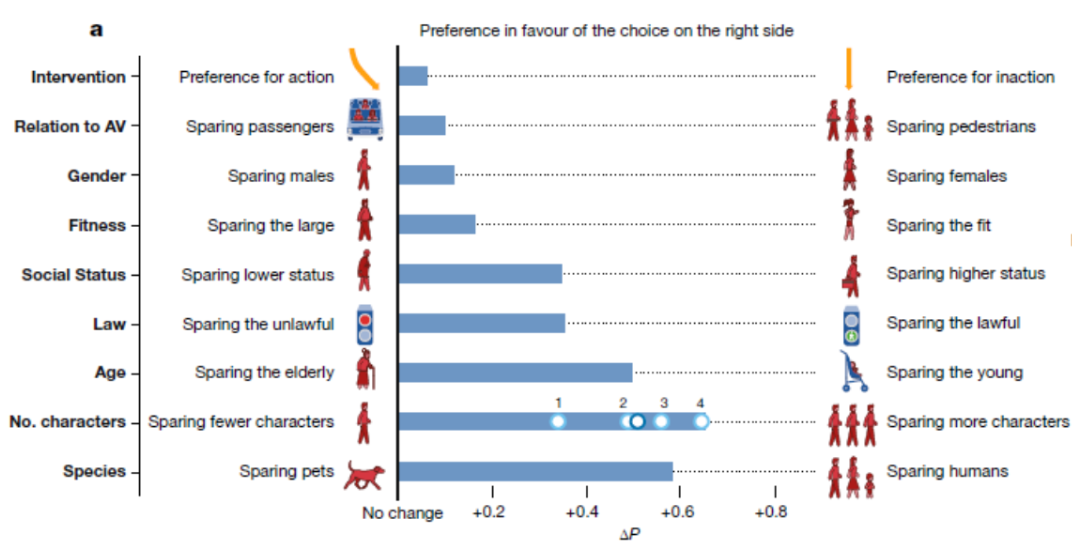

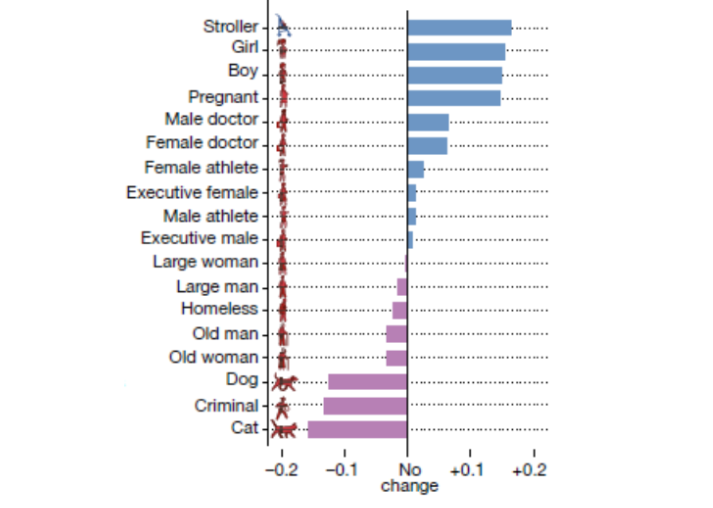

Image 6: Global preferences. AMCE (average marginal component effect) for each preference. In each row, ΔP is the difference between the probability of sparing characters possessing the attribute on the right, and the probability of sparing characters possessing the attribute on the left, aggregated over all other attributes. For example, for the attribute age, the probability of sparing young characters is 0.49 (s.e. = 0.0008) greater than the probability of sparing older characters. The 95% confidence intervals of the means are omitted owing to their insignificant width, given the sample size (n = 35.2 million). For the number of characters (No. characters), effect sizes are shown for each number of additional characters (1 to 4; n1 = 1.52 million, n2 = 1.52 million, n3 = 1.52 million, n4 = 1.53 million); the effect size for two additional characters overlaps with the mean effect of the attribute. AV, autonomous vehicle.[1]

Image 7: Relative advantage or penalty for each character, compared to an adult man or woman. For each character, ΔP is the difference between the probability of sparing this character (when presented alone) and the probability of sparing one adult man or woman (n = 1 million). For example, the probability of sparing a girl is 0.15 (s.e. = 0.003) higher than the probability of sparing an adult man or woman. [1]
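As a rough illustration of the ΔP quantity in the captions above, the sketch below computes a naive difference between two sparing probabilities from toy records. This is a simplification: the AMCE in [1] is estimated with a conjoint-analysis approach that averages over all other attributes, whereas this sketch simply compares raw proportions, and the records are invented.

```python
# Each invented record is one side of a dilemma: (attribute_level, was_spared).
records = [("young", True), ("old", False),
           ("young", True), ("old", False),
           ("young", False), ("old", True),
           ("young", True), ("old", False)]


def delta_p(rows, right, left):
    """Naive ΔP: P(spared | right level) minus P(spared | left level)."""
    def p_spared(level):
        flags = [spared for lvl, spared in rows if lvl == level]
        return sum(flags) / len(flags)
    return p_spared(right) - p_spared(left)


print(delta_p(records, "young", "old"))  # 0.75 - 0.25 = 0.5
```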

The Moral Machine plays a pivotal role in the ongoing debate on how to program SDCs to make ethical decisions. The aggregation of global judgments offers unique insights that can guide policymakers, technologists, and ethicists in creating AI algorithms that reflect human values. Additionally, the experiment has sparked significant public discourse on machine ethics, serving both as a research tool and as an educational platform that raises awareness of the ethical challenges posed by autonomous technologies.

Image 5: Global participation and CI in the Moral Machine Experiment

3. Methodology

This section details our approach to using CI for ethical decision-making in SDCs. The insights derived from the Moral Machine provide a robust dataset for training machine learning models that can guide SDCs toward decisions that reflect human ethical standards [2, 5]. For instance, by analyzing scenarios in which global participants choose between harming pedestrians and harming passengers, we can develop algorithms that prioritize actions according to broadly shared moral principles.

Machine learning techniques, including clustering, regression, classification, reinforcement learning, and deep learning, offer sophisticated ways to process complex datasets and to make predictions or decisions from data. These techniques can be adapted to the various needs of an SDC's autopilot system, enabling it to respond effectively to real-world situations.

3.1 Data

The data for this study was derived from scenarios generated by the Moral Machine experiment, which presented a series of moral dilemmas to participants. In each scenario, an SDC (Self-Driving Car) had to choose between two actions, swerving into the left lane or staying in the right lane, each leading to a potentially harmful outcome (harming the passengers or harming the pedestrians). These scenarios were translated into a dataset of four thousand rows (scenarios) and four main features describing the security and risk levels associated with the two possible lane decisions. The features were:

f1 (Security in the right lane): High values suggest a clear and secure lane, encouraging the SDC to continue on its current path (i.e., the security level for the passengers).

f2 (Pedestrian risk in the right lane): Lower values indicate a higher risk of pedestrian presence, calling for careful consideration of a lane change.

f3 (Security in the left lane): High values indicate that swerving to the left would lead to a safer path (i.e., the security level for the passengers).

f4 (Pedestrian risk in the left lane): Lower values denote significant pedestrian risk in the left lane, which could deter swerving.

The dataset also contains a column recording the decision made (stay or swerve) for each scenario reviewed by the participants.
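A minimal loader for a dataset with this layout might look like the following. The column names `f1` to `f4` and `decision`, the CSV format, and the assumption that features are normalized to [0, 1] (suggested by the thresholds reported in Section 4) are all assumptions; the sample rows are illustrative, not taken from the study's data.

```python
import csv
import io

FEATURES = ["f1", "f2", "f3", "f4"]  # assumed column names


def load_scenarios(csv_text):
    """Parse rows of f1..f4 plus a 'decision' column ('stay' or 'swerve')."""
    X, y = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        values = [float(row[name]) for name in FEATURES]
        # Assumption: features are normalized to [0, 1].
        if not all(0.0 <= v <= 1.0 for v in values):
            raise ValueError(f"feature out of range in row {row}")
        if row["decision"] not in ("stay", "swerve"):
            raise ValueError(f"unknown decision {row['decision']!r}")
        X.append(values)
        y.append(row["decision"])
    return X, y


sample = """f1,f2,f3,f4,decision
0.80,0.70,0.30,0.50,stay
0.60,0.20,0.90,0.75,swerve
"""
X, y = load_scenarios(sample)
print(X, y)
```

Validating ranges and labels at load time keeps malformed scenarios from silently shaping the learned rules.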

3.2 Model Implementation

A Decision Tree classifier was used to build a predictive model of the SDC's decision-making process. The tree evaluates scenarios based on features that describe the level of security or risk associated with staying in the current lane or swerving. The Decision Tree was trained using Python's scikit-learn library. Hyperparameters such as the depth of the tree and the node-splitting criterion (Gini impurity) were optimized based on performance on a validation set.
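The training and tuning procedure can be sketched with scikit-learn as follows. Because the study's dataset is not reproduced here, the sketch generates synthetic features and labels them with a hypothetical rule, so the resulting accuracy says nothing about the paper's model; the depth search range and the 80/20 split are likewise assumptions.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(4000, 4))  # synthetic f1..f4, NOT the study's data
# Hypothetical labeling rule, only to make the sketch runnable:
# swerve (1) when the left lane is more secure than the right lane (f3 > f1)
# and pedestrian risk in the left lane is low (f4 > 0.46).
y = ((X[:, 2] > X[:, 0]) & (X[:, 3] > 0.46)).astype(int)

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=0)

best_depth, best_score, best_model = None, -1.0, None
for depth in range(2, 13):
    model = DecisionTreeClassifier(criterion="gini", max_depth=depth, random_state=0)
    model.fit(X_train, y_train)
    score = model.score(X_val, y_val)  # validation accuracy
    if score > best_score:
        best_depth, best_score, best_model = depth, score, model

print(f"best max_depth={best_depth}, validation accuracy={best_score:.3f}")
```

In practice, a separate held-out test set (or cross-validation) would be needed to report an unbiased accuracy, since the validation set was used for selection.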

The tree's decisions were then mapped back to human-understandable rules, representing the ethical decisions encoded by the model.
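One way to perform this mapping with scikit-learn is to walk the fitted tree's internal arrays (`tree_.children_left`, `tree_.children_right`, `tree_.feature`, `tree_.threshold`, `tree_.value`) and emit one rule per leaf; scikit-learn's `export_text` offers a similar text dump. The helper `extract_rules`, the class-to-label mapping, and the toy training data are hypothetical; this is a sketch, not the authors' code.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

FEATURES = ["f1", "f2", "f3", "f4"]
LABELS = {0: "stay in the right lane", 1: "swerve to the left lane"}  # assumed encoding


def extract_rules(model):
    """Return one (conditions, decision) pair per leaf of a fitted tree."""
    t = model.tree_
    rules = []

    def walk(node, conditions):
        if t.children_left[node] == -1:  # leaf node
            label = model.classes_[int(np.argmax(t.value[node][0]))]
            rules.append((conditions, LABELS[int(label)]))
            return
        name, threshold = FEATURES[t.feature[node]], t.threshold[node]
        # scikit-learn sends samples with feature <= threshold to the left child.
        walk(t.children_left[node], conditions + [f"{name} <= {threshold:.2f}"])
        walk(t.children_right[node], conditions + [f"{name} > {threshold:.2f}"])

    walk(0, [])
    return rules


# Toy data with a hypothetical labeling rule (swerve when f4 > 0.46).
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(500, 4))
y = (X[:, 3] > 0.46).astype(int)
model = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

rules = extract_rules(model)
for number, (conditions, decision) in enumerate(rules, start=1):
    print(f"Rule {number}: if {' and '.join(conditions)} then {decision}")
```

Because the toy labels depend only on f4, the tree needs a single split and yields two rules; the study's tree yields 128.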

4. Results

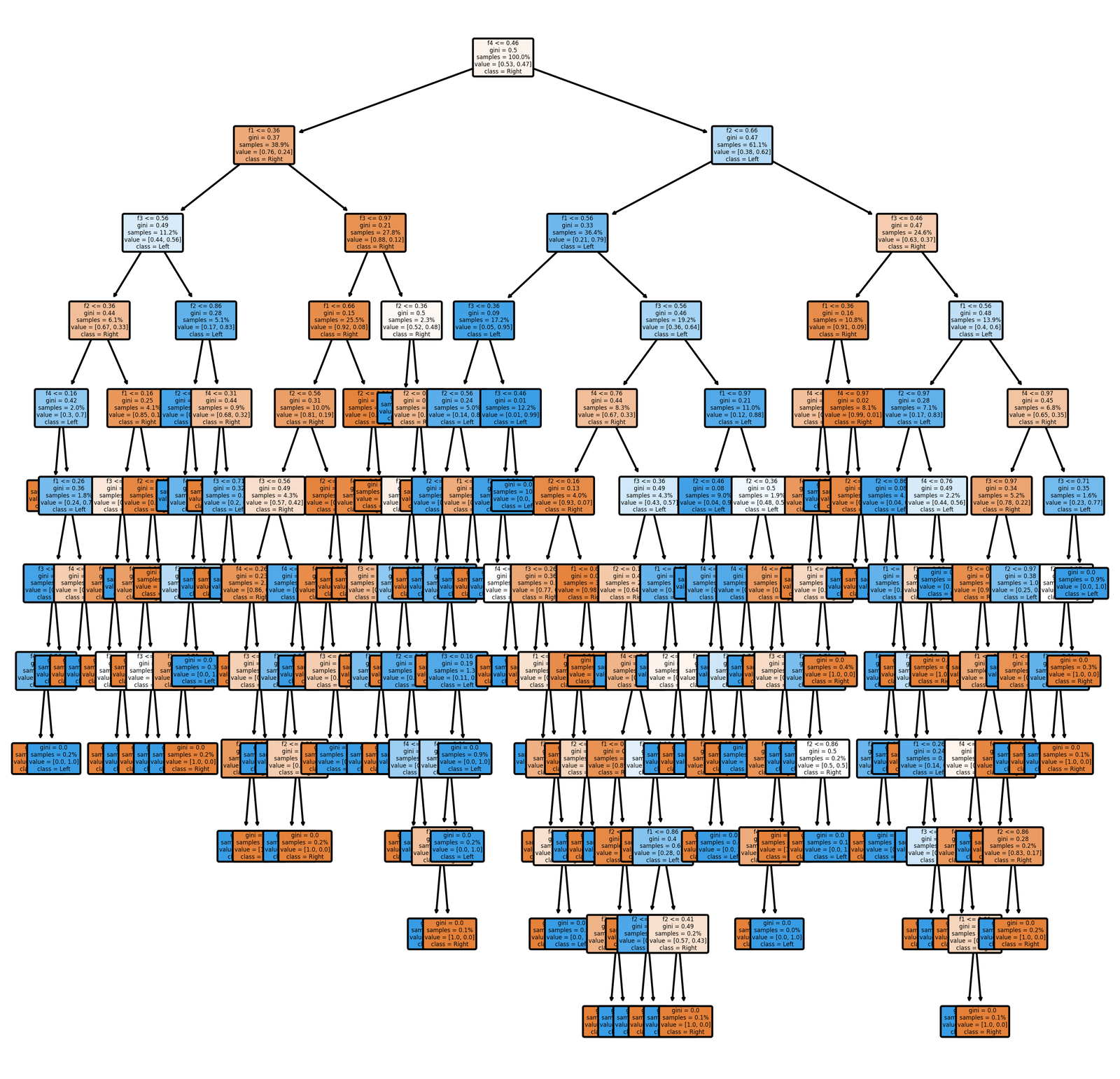

Figure 1: The Decision Tree

4.1 Ethical Rules Generated

The decision tree considered in this analysis comprises 128 leaf nodes. A leaf node is a terminal node in the decision tree that contains a final decision or prediction. In the context of ethical decision-making for self-driving cars, each leaf node corresponds to a unique set of conditions regarding the security and risk factors associated with staying in the current lane or swerving to the left lane. This means the decision tree generated 128 ethical rules which can be incorporated into the SDC’s decision-making process. For instance, Rule Number 1 states that if the conditions f4 <= 0.46, f1 <= 0.36, f3 <= 0.56, f2 <= 0.36, and f4 <= 0.16 are met, the decision is to stay in the right lane. Rule Number 40 indicates that if the conditions f4 <= 0.46, f1 > 0.36, f3 > 0.97 and f2 <= 0.36 are met, the decision is to swerve to the left lane.
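The two rules quoted above can be written as data and checked against a scenario, as in this minimal Python sketch. Only Rules 1 and 40 come from the text; the helper names and scenario values are invented. In the full tree the 128 leaf rules are mutually exclusive, so at most one of them matches any scenario.

```python
import operator

OPS = {"<=": operator.le, ">": operator.gt}

# Rules 1 and 40 from the text, as (feature, operator, threshold) triples.
RULES = {
    1: ([("f4", "<=", 0.46), ("f1", "<=", 0.36), ("f3", "<=", 0.56),
         ("f2", "<=", 0.36), ("f4", "<=", 0.16)], "stay"),
    40: ([("f4", "<=", 0.46), ("f1", ">", 0.36), ("f3", ">", 0.97),
          ("f2", "<=", 0.36)], "swerve"),
}


def matches(conditions, scenario):
    """True if every condition holds for the scenario's feature values."""
    return all(OPS[op](scenario[f], thr) for f, op, thr in conditions)


def apply_rules(scenario):
    """Return (rule number, decision) for the first matching rule, else None."""
    for number, (conditions, decision) in RULES.items():
        if matches(conditions, scenario):
            return number, decision
    return None


print(apply_rules({"f1": 0.20, "f2": 0.30, "f3": 0.40, "f4": 0.10}))  # (1, 'stay')
print(apply_rules({"f1": 0.50, "f2": 0.30, "f3": 0.99, "f4": 0.40}))  # (40, 'swerve')
```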

The rule depths in the decision tree vary, with most rules concentrated at depths 6 to 9: 19 rules at depth 6, 27 at depth 7, 31 at depth 8, and 22 at depth 9, i.e., 99 of the 128 rules. This distribution suggests that the decision tree incorporates ethical considerations at multiple levels of complexity, allowing for nuanced decisions based on combinations of the four features.
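Assuming that a rule's depth equals its number of conditions (the depth of its leaf below the root), the depth distribution can be computed from the child arrays of a fitted tree; with scikit-learn these would be `model.tree_.children_left` and `model.tree_.children_right`. The sketch below uses a small hand-built tree and also checks the share of rules covered by the reported counts.

```python
from collections import Counter


def leaf_depth_histogram(children_left, children_right):
    """Count leaves per depth; -1 marks a missing child, as in scikit-learn's tree_."""
    counts = Counter()
    stack = [(0, 0)]  # (node id, depth); node 0 is the root
    while stack:
        node, depth = stack.pop()
        if children_left[node] == -1:  # leaf: a rule with `depth` conditions
            counts[depth] += 1
        else:
            stack.append((children_left[node], depth + 1))
            stack.append((children_right[node], depth + 1))
    return counts


# Tiny hand-built tree: root 0 has a leaf (1) and an inner node (2) with two leaves.
print(leaf_depth_histogram([1, -1, 3, -1, -1], [2, -1, 4, -1, -1]))  # Counter({2: 2, 1: 1})

reported = {6: 19, 7: 27, 8: 31, 9: 22}  # counts reported in the text
in_range = sum(reported.values())
print(in_range, f"{in_range / 128:.1%}")  # 99 77.3%
```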

4.2 Features importance

The feature importances extracted from the model (f1: 22.42%, f2: 23.57%, f3: 27.74%, f4: 26.27%) indicate a relatively balanced use of the features, with a slight preference for f3 and f4, which relate to passenger security and pedestrian risk in the left lane, respectively. No single feature dominates, so the model's decisions draw on all four inputs.
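For a single scikit-learn tree, `feature_importances_` is the normalized mean decrease in impurity (Gini importance): each split credits its feature with the weighted impurity reduction it achieves. The sketch below recomputes that quantity from the node arrays of a small hand-built tree; the helper name, the tree, and its numbers are invented for illustration.

```python
def mdi_importances(children_left, children_right, feature, impurity,
                    n_samples, n_features):
    """Normalized mean decrease in impurity per feature for a single tree."""
    importances = [0.0] * n_features
    for node, left in enumerate(children_left):
        if left == -1:
            continue  # leaves do not split, so they credit no feature
        right = children_right[node]
        # Weighted impurity before the split minus weighted impurity after it.
        decrease = (n_samples[node] * impurity[node]
                    - n_samples[left] * impurity[left]
                    - n_samples[right] * impurity[right])
        importances[feature[node]] += decrease
    total = sum(importances)
    return [v / total for v in importances] if total > 0 else importances


# Hand-built tree: the root splits on f4 (index 3), its right child on f3 (index 2).
importances = mdi_importances(
    children_left=[1, -1, 3, -1, -1],
    children_right=[2, -1, 4, -1, -1],
    feature=[3, -2, 2, -2, -2],            # -2 marks leaves, as in scikit-learn
    impurity=[0.48, 0.0, 0.32, 0.0, 0.0],  # Gini impurity of each node
    n_samples=[100, 50, 50, 40, 10],
    n_features=4,
)
print([round(v, 3) for v in importances])  # [0.0, 0.0, 0.333, 0.667]
```

Note that a feature used at the root tends to collect a large share because the root split acts on all samples.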

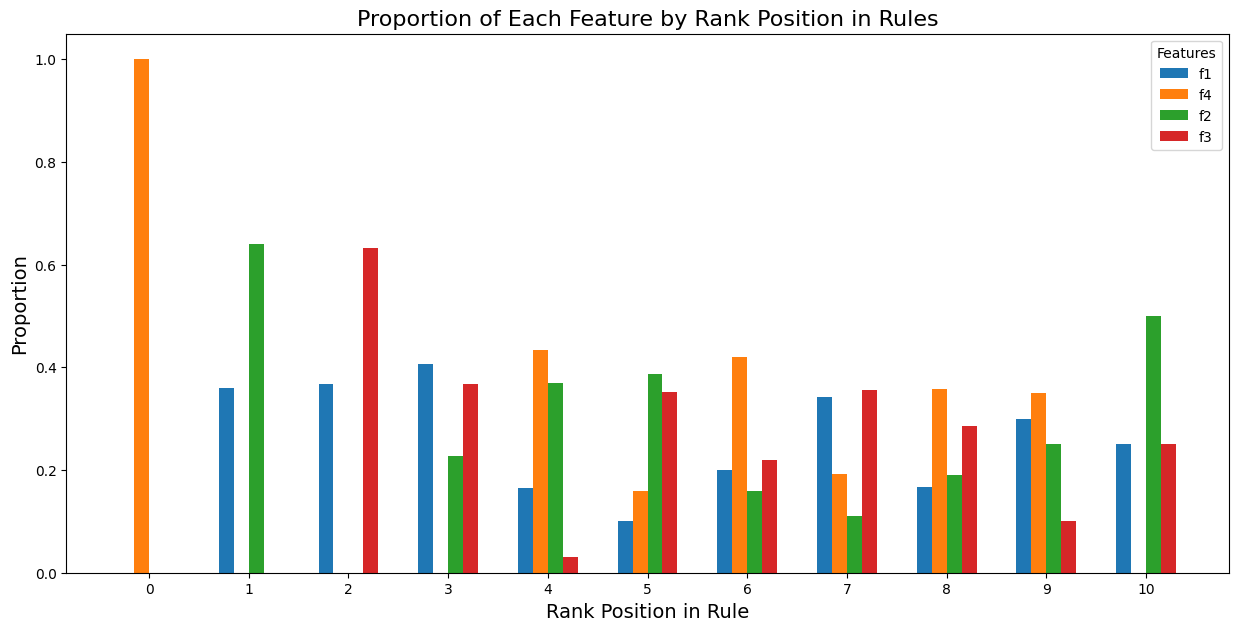

Figure 2: Proportion of Each Feature by Rank Position in Rules

The model's rules are equally split between 'stay in the right lane' and 'swerve to the left lane', with each decision appearing in 64 (50%) of the 128 rules. At the level of rule counts, this balance suggests no built-in bias towards either action. The analysis of feature usage by rank within the decision rules shows that at the root node (rank 1), feature f4 accounts for 100% of the conditions, since every rule begins with the root split on f4; the initial decision therefore weighs the pedestrian risk in the left lane most heavily. Deeper in the tree, importance shifts among the features, reflecting the nuanced ethical considerations required at different decision stages.
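The rank analysis behind Figure 2 can be sketched as follows: for each rank position (1 = root), count which feature each rule tests there and normalize by the number of rules that reach that rank. The normalization is an assumption about how the figure was built, the helper name is hypothetical, and the example uses only the feature order of Rules 1 and 40 from Section 4.1.

```python
from collections import Counter, defaultdict


def feature_share_by_rank(rule_features):
    """Map each rank (1 = root) to the share of rules testing each feature there."""
    by_rank = defaultdict(Counter)
    for features in rule_features:
        for rank, name in enumerate(features, start=1):
            by_rank[rank][name] += 1
    # Normalize by the number of rules that reach each rank.
    return {rank: {name: n / sum(counts.values()) for name, n in counts.items()}
            for rank, counts in sorted(by_rank.items())}


# Features tested by Rules 1 and 40 (Section 4.1), in order from the root.
rule_features = [["f4", "f1", "f3", "f2", "f4"], ["f4", "f1", "f3", "f2"]]
shares = feature_share_by_rank(rule_features)
print(shares[1])  # {'f4': 1.0}
```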

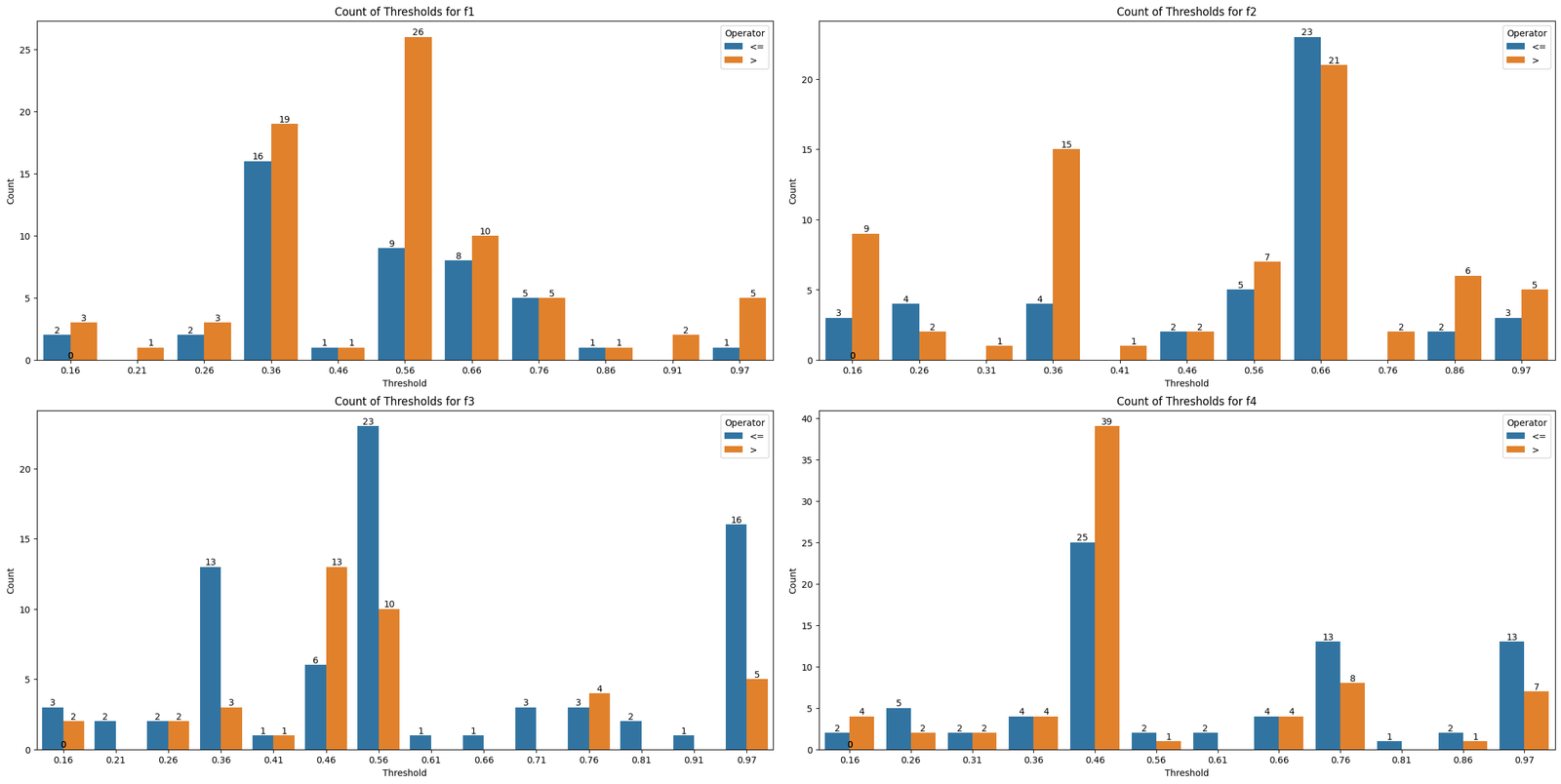

4.3 Decision-Making Mechanisms: Staying in the Right Lane

In the rules that lead to staying in the right lane, the most frequent condition on feature f1 (general security in the right lane) is f1 > 0.56, indicating higher general security. Lower values of feature f3 (security in the left lane), particularly f3 <= 0.56, which imply higher risk in the left lane, also support the decision to stay in the right lane. Additionally, the most frequent condition on feature f4 (pedestrian risk in the left lane) in these rules is f4 > 0.46: even when the risk of pedestrian presence in the left lane is lower, the system tends to favor staying in the right lane.

This suggests that the decision to stay in the right lane is influenced by a combination of sufficient security in the right lane and the presence of risks in the left lane, either due to lower security or the potential for pedestrian encounters. The decision tree seems to be calibrated to ensure that the right lane is chosen when it's safe to do so, likely prioritizing the SDC passengers' safety in such scenarios.

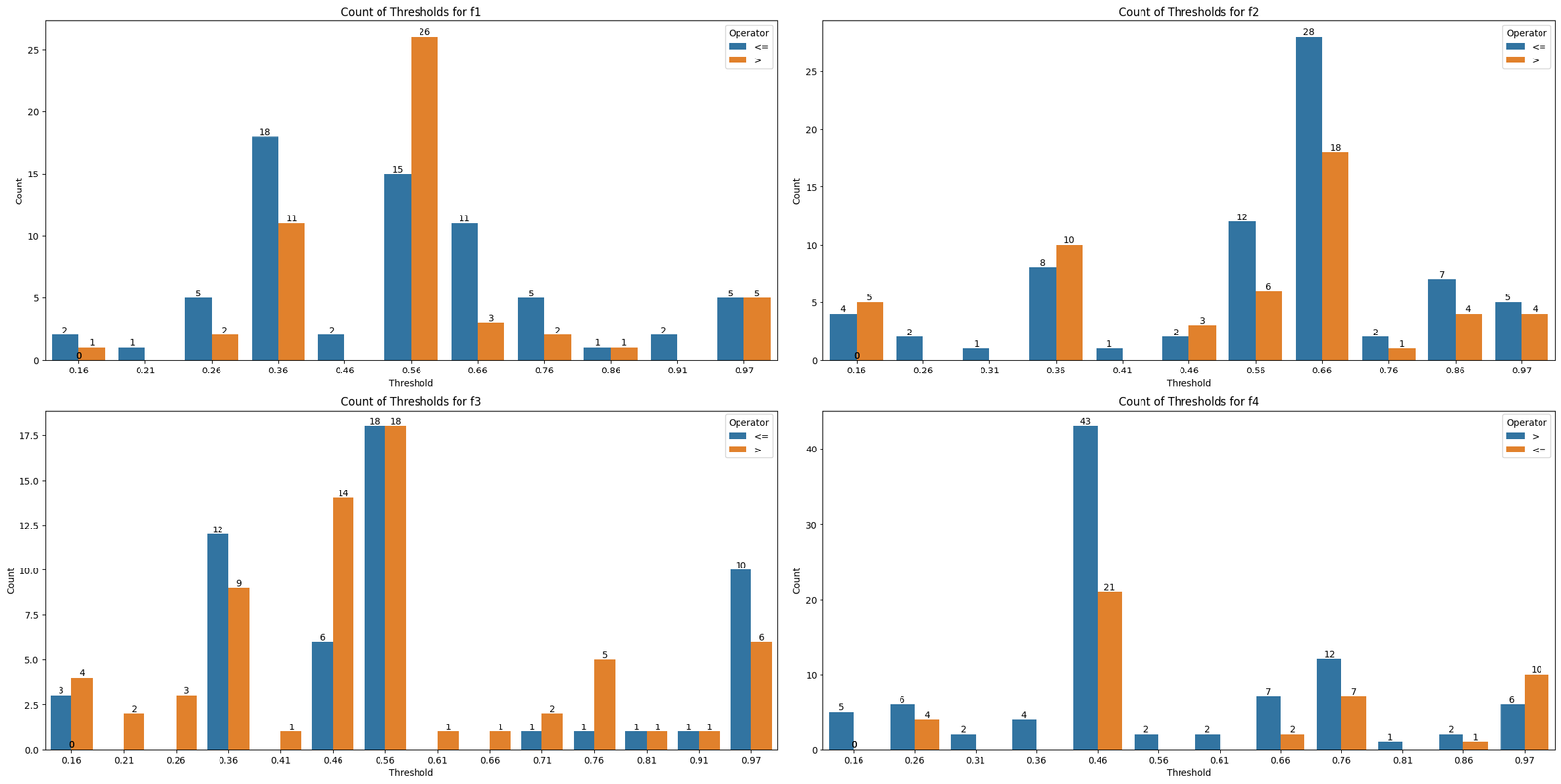

Figure 3: Thresholds and Operators for Staying in the Right Lane Decision-Making
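The per-feature peaks described in this section can be obtained by tallying the (operator, threshold) pairs that appear in the rules for a given decision and keeping the most frequent one per feature. In the minimal sketch below, the helper name and the four rules are hypothetical, chosen only to show the mechanics; the same function applies to the swerving rules discussed next.

```python
from collections import Counter


def most_common_condition(rules, decision):
    """Per feature, the (operator, threshold) pair used most often in rules
    that end in `decision`."""
    tallies = {}
    for conditions, outcome in rules:
        if outcome != decision:
            continue
        for feature, op, threshold in conditions:
            tallies.setdefault(feature, Counter())[(op, threshold)] += 1
    return {feature: counts.most_common(1)[0][0]
            for feature, counts in sorted(tallies.items())}


# Hypothetical rules in (feature, operator, threshold) form, for illustration only.
rules = [
    ([("f4", ">", 0.46), ("f1", ">", 0.56), ("f3", "<=", 0.56)], "stay"),
    ([("f4", ">", 0.46), ("f1", ">", 0.56), ("f2", "<=", 0.66)], "stay"),
    ([("f4", "<=", 0.46), ("f1", "<=", 0.36), ("f3", "<=", 0.56)], "stay"),
    ([("f4", ">", 0.46), ("f2", "<=", 0.66), ("f3", ">", 0.56)], "swerve"),
]
print(most_common_condition(rules, "stay"))
```

For these toy rules the call returns `{'f1': ('>', 0.56), 'f2': ('<=', 0.66), 'f3': ('<=', 0.56), 'f4': ('>', 0.46)}`.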

4.4 Decision-Making Mechanisms: Swerving to the Left Lane

Several factors beyond the general security of the right lane influence the decision to swerve to the left lane. In the swerving rules, the most frequent condition on feature f1 (general security in the right lane) is f1 > 0.56, so high security in the right lane alone does not determine whether the car swerves. The most frequent condition on feature f2 (pedestrian risk in the right lane) is f2 <= 0.66, implying that the presence of pedestrians or hazards in the right lane significantly influences the decision to swerve left and highlighting the importance of pedestrian safety in lane-changing choices. Moreover, when feature f4 (pedestrian risk in the left lane, where higher values indicate greater pedestrian security) exceeds 0.46, indicating moderate security for pedestrians in the left lane, the decision tree tends to favor swerving to that lane, suggesting a preference for pedestrian safety in the decision-making process.

The decision tree's behavior suggests that swerving to the left lane isn't just about the right lane's security. Even when the right lane seems generally secure, the system might still opt to swerve leftward if there's some pedestrian risk. This underscores the importance of pedestrian safety in lane-changing decisions. Moreover, if the left lane offers moderate to high pedestrian security, it's more likely to choose that lane. Essentially, it prioritizes pedestrian safety over staying in the right lane.

These patterns indicate that pedestrian safety takes priority over the simplicity of maintaining the current path, even when that path is generally secure. This reflects an ethical choice within the decision logic: the system may opt for a maneuver that slightly compromises passenger comfort to avoid greater risks to pedestrians, provided the alternative path is safe.

Figure 4: Thresholds and Operators for Swerving to the Left Lane Decision-Making

4.5 Key Insights

The decision rules derived from global responses provide insight into collective human cognition and moral preferences concerning road safety.

These rules indicate a common tendency to be cautious, preferring to stay in the current lane unless there is a good reason to change. This cautious approach, which prioritizes stability and safety, appears to reflect a cognitive bias towards ensuring passenger safety, and it corresponds to a driving style focused on keeping passengers safe. At the same time, the data show a strong emphasis on pedestrian safety, even at some cost in passenger convenience. This ethical standpoint reflects a commitment to protecting vulnerable road users and to safe driving practices that minimize risks for everyone on the road.

5. Discussion

The aggregation of decisions from diverse global participants has revealed areas of ethical consensus, suggesting that despite cultural and individual differences, there are common ethical principles that people tend to agree upon. The results suggest a global preference for cautious driving that prioritizes both passenger and pedestrian safety. This is consistent with the ethical aim of protecting vulnerable road users while ensuring safe driving practices [1]. Such consensus is invaluable for establishing international AI ethics guidelines, offering a foundation for developing AI systems that adhere to widely shared moral values. Identifying a global ethical consensus can guide international policy-making and standard-setting for AI ethics. It encourages a unified approach to ethical AI development, ensuring that AI technologies are designed around a common set of moral principles respected worldwide.

One of the experiment's key achievements is enhancing the transparency of AI development processes [1, 6, 7]. By engaging the public in ethical decision-making discussions, the initiative helps align AI systems more closely with human values and societal norms, fostering a greater sense of trust in autonomous technologies. Building public trust is essential for the widespread adoption of AI technologies. Transparency in how AI systems make ethical decisions can demystify AI operations, mitigate fears, and encourage public support for AI innovations. It also sets a precedent for involving the public in the ethical development of AI, promoting a participatory approach to AI governance.

While the study has identified areas of consensus, it is also important to incorporate cultural nuances into the ethical programming of AI, acknowledging that ethical judgments can vary greatly across different cultures [1]. Recognizing cultural differences in ethical norms and values suggests a need for AI systems, particularly those deployed in multicultural settings, to be adaptable and sensitive to cultural contexts. This could lead to the development of AI technologies that can adjust their ethical decision-making processes based on cultural considerations, enhancing their global applicability and acceptance.

6. Conclusion and Future Work

The integration of CI into AI ethics, as illustrated by the Moral Machine and its application in developing a decision tree for SDCs, represents a significant step forward in the development of morally aware autonomous vehicles. Through machine learning, SDCs can be equipped to make ethically informed decisions in critical situations, reflecting a more universally accepted standard of morality. This approach not only enhances the safety and reliability of autonomous vehicles but also aligns their operation with human ethical principles, paving the way for more socially responsible AI technologies. As we continue to explore this new frontier, the collective wisdom of humanity remains our most valuable guide.

We advocate for the creation of ethical frameworks for AI that are not static but evolve alongside societal values. This dynamic approach ensures that AI technologies remain ethically relevant and responsive to changing societal norms and expectations. Future work could focus on developing adaptive ethical frameworks in which AI systems are continuously updated to reflect societal changes, ensuring their long-term alignment with human ethics. This could involve mechanisms for regular public feedback on AI ethics, AI systems that learn and adapt their ethical guidelines over time, and policies that support the ongoing evaluation and revision of AI ethical standards.

References (Selected articles)

[1] A. Gröschel Jr, E. Awad, and J. Schulz, “2018 article the moral machine,” Nature, 01 2018.

[2] I. Coca Vila, “Self-driving cars in dilemmatic situations: An approach based on the theory of justification in criminal law,” Criminal Law and Philosophy, vol. 12, pp. 1–24, 03 2018.

[3] J. Bonnefon, A. Shariff, and I. Rahwan, “The trolley, the bull bar, and why engineers should care about the ethics of autonomous cars,” Proceedings of the IEEE, vol. 107, 03 2019.

[4] P. Lin, Why Ethics Matters for Autonomous Cars, 05 2016, pp. 69–85.

[5] R. Noothigattu, S. Gaikwad, E. Awad, S. Dsouza, I. Rahwan, P. Ravikumar, and A. Procaccia, “A voting-based system for ethical decision making,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, 09 2017.

[6] S. Arfini, D. Spinelli, and D. Chiffi, “Ethics of self-driving cars: A naturalistic approach,” Minds and Machines, vol. 32, 06 2022.

[7] S. Karnouskos, “The role of utilitarianism, self-safety, and technology in the acceptance of self-driving cars,” Cognition, Technology & Work, vol. 23, pp. 1–9, 11 2021.